Measures of Effective Teaching (MET) Longitudinal Database Guide

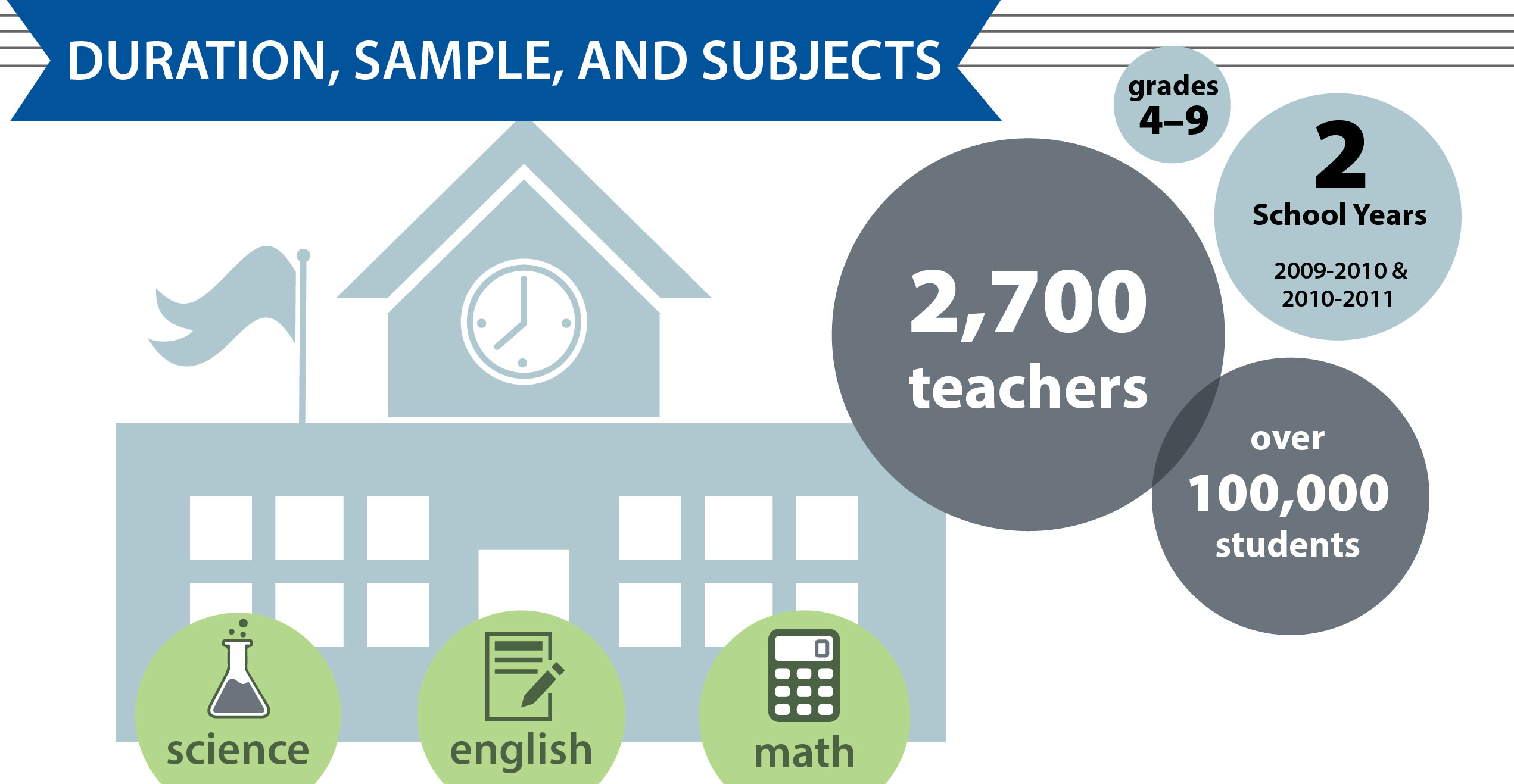

The Measures of Effective Teaching (MET) project, funded by the Bill & Melinda Gates Foundation, stands as the largest study of classroom teaching ever conducted in the U.S., involving over 2,500 teachers across 317 schools in six major school districts during the 2009–10 and 2010–11 academic years.

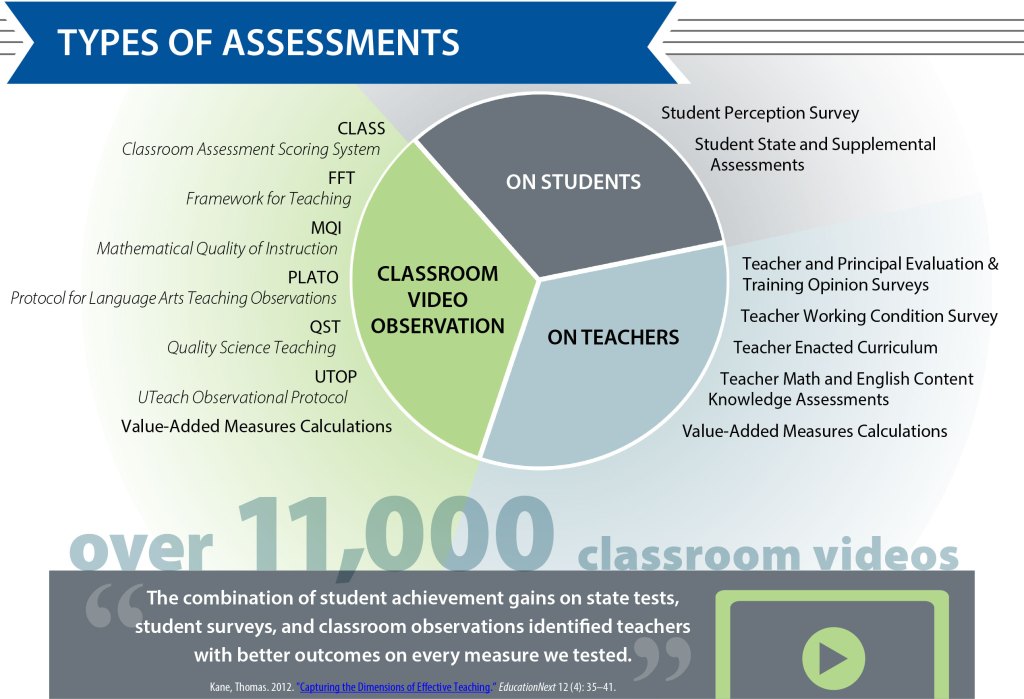

Designed to build and test multiple measures of teaching effectiveness, the MET project aimed to determine how evaluation methods could best inform teachers and help districts cultivate and identify excellent teaching. Comprehensive data—including student achievement, surveys, classroom video, content knowledge assessments, and administrative information—were gathered to investigate the reliability and validity of these measures, the attributes of high-quality instruction, and how multiple measures could inform teacher development and evaluation.

Research from MET underscores that teacher quality is the most significant in-school factor affecting student success, and that teacher quality varies more within schools than between them. By integrating a range of metrics, MET demonstrated that combining multiple, reliable measures provides a clearer, actionable picture of teaching effectiveness, facilitating better feedback, professional development, hiring, and tenure decisions.

Access to the MET LDB is restricted to approved researchers via the ICPSR Virtual Data Enclave (VDE). To gain access, researchers must submit an application, provide documentation of IRB approval or exemption, and complete a Restricted Data Use Agreement (RDUA) signed by both the Principal Investigator and an institutional representative. For detailed steps, see the “Accessing MET Data in the Virtual Data Enclave” section below.

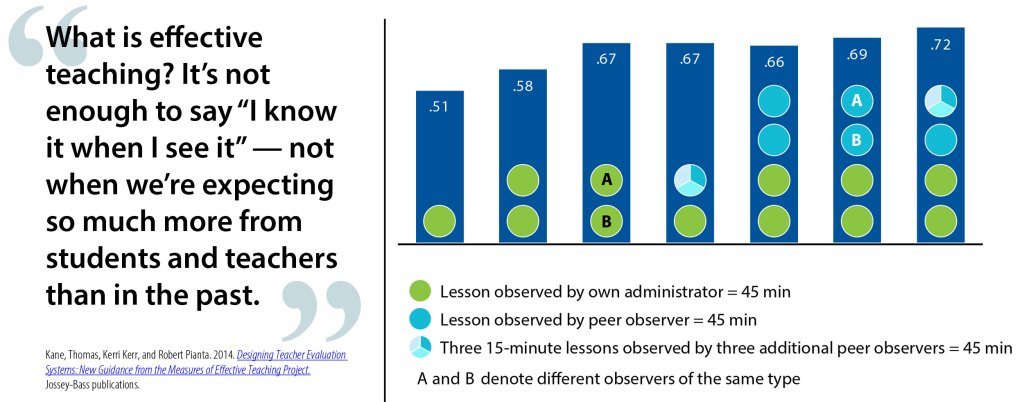

Reliability of Classroom Observation Methods

Types of Assessments

Accessing MET Data in the Virtual Data Enclave

1. Submit Application

Note: collaborations require separate Restricted Data Use Agreements for each institution.

On a MET study homepage, click “Access Restricted Data” to enter VDE Manage, the online system where you can submit your application. The application requires:

- Principal Investigator (PI) information (the PI must hold a PhD or other terminal degree and a faculty or research position)

- Abstract

- Research Description (700 words or fewer), including:

  - The research questions, problems, or issues your research will address

  - A description of the data you plan to use in your research

  - IMPORTANT: If you plan to use the District-Wide Files, you must explain how your research will contribute to building and testing measures of effective teaching, and how it will help school districts identify and develop great teaching.

- Restricted data selections

2. Receive Confirmation & Download an RDUA

ICPSR will email you a confirmation when your application is received, including a link to download an RDUA.

3. Email Required Documents

To complete your application, email your signed RDUA (signed by the PI and an institutional representative of the PI’s institution) and your IRB determination to icpsr-help@umich.edu.

4. Application Review and Approval

ICPSR reviews your application and may return it to you if further work is needed. Note: because the agreement for MET data requires countersignature by the University of Michigan Office of Research and Sponsored Projects (ORSP), approval may be delayed until ICPSR receives the fully executed agreement from ORSP. This process can take two weeks or longer.

5. Purchase License(s)

Return to your application to purchase annual VDE licenses for your research staff.

6. Assign Licenses

Return to your application to assign purchased VDE license(s) to your research staff.

7. Complete VDE Training

All team members must watch a VDE training video and complete a quiz.

- Watch the ICPSR VDE Training video

- Complete the VDE Training Quiz. If you answered any of the quiz questions incorrectly, please rewatch the video and consult the VDE documentation.

8. Email Your Quiz Score

Email icpsr-help@umich.edu to notify ICPSR staff that you have completed the VDE training and passed the quiz. In your email, include your name, VDE project number, and the email address you provided in the training quiz so that we can verify your training completion.

9. VDE Set Up

ICPSR sets up user accounts for your research staff and creates a space for your project in the VDE.

10. Receive Instructions for Access

You will receive instructions for activating a Duo two-factor authentication account, downloading the VMware software (Omnissa in Windows) needed to access the VDE Management System, and logging in to and navigating within the VDE Management System.

11. Access MET Data

Access your project’s folder in the VDE containing the requested restricted data.

Restrictions on District Identification and Comparison

- The MET data were not designed to be representative of teachers within each district; therefore, comparisons across districts are not scientifically valid. Public identification of districts increases the risk of disclosing individual teacher or student identities and may result in misleading comparisons. Analytical models should account for district-level differences, but no conclusions about district differences should be drawn from these data. In all publications, district names must be masked and should not appear in tables or text, except in statements listing all participating districts in the MET project.
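One possible way to meet the masking requirement is to replace district names with neutral labels before producing any table or text. The sketch below is a hypothetical helper, not an ICPSR tool: it assumes your records carry a `district` field holding the district name (the actual MET variable names and district codes may differ), and it shuffles the letter assignment so that labels do not follow the alphabetical order of the real names.

```python
import random

# The six participating districts, as listed in the MET Sampling section.
MET_DISTRICTS = [
    "Charlotte-Mecklenburg",
    "Dallas",
    "Denver",
    "Hillsborough County",
    "Memphis",
    "New York City",
]


def make_district_mask(districts, seed=None):
    """Map each district name to a neutral label such as 'District A'.

    Letters are assigned in shuffled order so the labels do not reveal the
    alphabetical order of the real names. `seed` only makes the mapping
    reproducible within one project; keep the mapping itself private.
    """
    rng = random.Random(seed)
    shuffled = list(districts)
    rng.shuffle(shuffled)
    return {name: f"District {chr(ord('A') + i)}" for i, name in enumerate(shuffled)}


def mask_rows(rows, mask, key="district"):
    """Return copies of dict rows with the (hypothetical) district field masked."""
    return [{**row, key: mask[row[key]]} for row in rows]
```

The mapping from names to labels should stay inside your project space; only the labels are meant to appear in publications.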

Permitted Uses of District-Wide Files

- Parents and teachers consented to secondary analysis of all data files and videos except the District-Wide Files. The District-Wide Files are a collection of administrative data provided to approved researchers in accordance with the Family Educational Rights and Privacy Act (FERPA), which stipulates that student record data may be distributed for secondary analysis only for the purposes of education research. Please see FERPA §99.31 for further information on appropriate uses of student administrative records. All MET LDB Data Use Agreement applications will be evaluated to ensure that any proposed use of the District-Wide Files complies with FERPA requirements and falls within the scope of the original MET project’s goals: to build and test measures of effective teaching and to help school districts identify and develop great teaching.

MET Design

MET Longitudinal Database data are organized into multiple ICPSR studies to clarify relationships among diverse data files. Three primary sample sets are included: the full MET sample (all teachers and students), the randomization sample (drawn from the full sample), and the district-wide census (the source of the full sample). Data are available at six levels of analysis: student, teacher, class/section, school, video observation segment, and survey or item-level. Learn more about the MET study organization (pdf).
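Because these levels are nested (students within class sections, sections within teachers, teachers within schools), a common preparatory step is to roll lower-level records up to a higher level before merging. The following is a minimal sketch in plain Python; the field names `section_id` and `score` are hypothetical placeholders, and the real ID and variable names should be taken from the file organization chart and codebooks.

```python
from collections import defaultdict
from statistics import mean


def section_means(student_rows, section_key="section_id", value_key="score"):
    """Aggregate student-level rows to one mean value per class/section.

    Field names are hypothetical placeholders. Rows whose value is missing
    (None) are skipped rather than counted as zero.
    """
    by_section = defaultdict(list)
    for row in student_rows:
        value = row.get(value_key)
        if value is not None:
            by_section[row[section_key]].append(value)
    return {section: mean(values) for section, values in by_section.items()}
```

The resulting section-level values can then be joined to section- or teacher-level files on the shared ID.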

MET Sampling

- District Recruitment:

From July to November 2009, districts were selected based on interest, size, central office support, willingness to participate, and local political or union backing. The participating districts were Charlotte-Mecklenburg (NC), Dallas (TX), Denver (CO), Hillsborough County (FL), Memphis (TN), and New York City (NY).

- School Recruitment:

Excluded from participation were special education, alternative, community, autonomous dropout/pregnancy, returning education, and vocational schools not teaching academic subjects, as well as schools whose team teaching made it impossible to attribute student learning to individual teachers.

- Teacher Recruitment:

All teachers in selected grade/subject combinations were invited unless they engaged in team teaching or planned to leave or change subjects the following year, or unless their grade/subject had fewer than two teachers, the minimum needed to form an exchange group for randomization in Year Two.

- Realized Samples:

In Year One of the study, a total of 2,741 teachers in 317 schools took part in the MET study; by contrast, the Year Two sample includes 2,086 teachers in 310 schools. The table below shows the realized sample sizes of the MET teacher samples by focal grade/subject for both years of the study. The Year One column (left-hand column) shows all teachers who participated in Year One, regardless of their eligibility for the randomization that took place in Year Two. The Year Two columns (right-hand columns) show the number of teachers who participated in Year Two, broken out by randomization status.

Year One MET Teacher Sample vs. MET LDB Core Teacher Sample by Focal Grade/Subject

| Focal Grade/Subject | Full Sample: All Year One Teachers (AY 2009-2010) | Core Study Sample, All Teachers Present in Year Two (AY 2010-2011): Randomized | Core Study Sample, All Teachers Present in Year Two (AY 2010-2011): Non-Randomized |
|---|---|---|---|
| 4th and 5th Grade English/Language Arts (ELA) | 138 | 98 | 29 |
| 4th and 5th Grade Mathematics | 102 | 67 | 31 |
| 4th and 5th Grade ELA and Mathematics | 634 | 305 | 52 |
| Grades 6-8 ELA | 606 | 292 | 139 |
| Grades 6-8 Mathematics | 528 | 282 | 120 |
| Grades 6-8 ELA and Mathematics | 18 | 4 | 4 |
| 9th Grade Algebra I | 233 | 116 | 44 |
| 9th Grade English | 242 | 108 | 48 |
| 9th Grade Biology | 240 | 103 | 60 |
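As a quick arithmetic check, the table's columns can be summed. The Year One column totals 2,741, matching the Year One figure in the text. The two Year Two columns total 1,902, fewer than the 2,086 Year Two teachers cited above; because the table is labeled as the MET LDB Core Teacher Sample, the two figures may count different populations, and the User Guide should be consulted for the exact sample definitions.

```python
# Counts transcribed from the sample-size table above:
# (grade/subject, Year One full sample, Year Two randomized, Year Two non-randomized)
TABLE = [
    ("4th and 5th Grade ELA", 138, 98, 29),
    ("4th and 5th Grade Mathematics", 102, 67, 31),
    ("4th and 5th Grade ELA and Mathematics", 634, 305, 52),
    ("Grades 6-8 ELA", 606, 292, 139),
    ("Grades 6-8 Mathematics", 528, 282, 120),
    ("Grades 6-8 ELA and Mathematics", 18, 4, 4),
    ("9th Grade Algebra I", 233, 116, 44),
    ("9th Grade English", 242, 108, 48),
    ("9th Grade Biology", 240, 103, 60),
]

year_one = sum(row[1] for row in TABLE)
randomized = sum(row[2] for row in TABLE)
non_randomized = sum(row[3] for row in TABLE)

print(year_one)                     # 2741
print(randomized, non_randomized)   # 1375 527
print(randomized + non_randomized)  # 1902
```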

Instruments, Measures, and Analysis

The MET project assessed teaching effectiveness using multiple measures:

- Student achievement: State and supplemental test scores; value-added models estimating teacher impact.

- Classroom observations: Video recordings of lessons collected in each study year, scored using six protocols (CLASS, FFT, MQI, PLATO, QST, UTOP).

- Teacher assessments: Tests of pedagogical content knowledge.

- Surveys: Student surveys on classroom experience; teacher surveys on working conditions and support.

Randomization

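Within each school, teachers were organized into “exchange groups” by grade and subject. For Year Two, class rosters were randomly assigned to eligible teachers within each exchange group, so that differences in student outcomes across teachers would not reflect how students had been sorted into classrooms. This design enables causal analysis of teacher effects.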
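The exchange-group logic can be illustrated with a small simulation. This is a hypothetical sketch, not the MET project’s randomization code: the group key `(school, grade, subject)`, the ID strings, and the one-roster-per-teacher assumption are all illustrative. Groups with fewer than two teachers are skipped, mirroring the eligibility rule described under Teacher Recruitment.

```python
import random
from collections import defaultdict


def randomize_rosters(teachers, rosters, seed=None):
    """Randomly assign class rosters to teachers within exchange groups.

    teachers: dict teacher_id -> group key, e.g. (school, grade, subject)
    rosters:  dict roster_id  -> group key
    Assumes each eligible group has exactly one roster per teacher.
    Returns a dict roster_id -> teacher_id.
    """
    rng = random.Random(seed)
    teachers_by_group = defaultdict(list)
    rosters_by_group = defaultdict(list)
    for teacher, group in teachers.items():
        teachers_by_group[group].append(teacher)
    for roster, group in rosters.items():
        rosters_by_group[group].append(roster)

    assignment = {}
    for group, group_teachers in teachers_by_group.items():
        if len(group_teachers) < 2:
            continue  # fewer than two teachers: no exchange is possible
        group_rosters = sorted(rosters_by_group[group])
        if len(group_rosters) != len(group_teachers):
            raise ValueError(f"group {group!r} needs one roster per teacher")
        # Sort first so a given seed gives the same result regardless of input order.
        shuffled = sorted(group_teachers)
        rng.shuffle(shuffled)
        assignment.update(zip(group_rosters, shuffled))
    return assignment
```

Every assigned roster stays within its own exchange group; only which teacher receives it is random.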

Video Observation Scoring

Video lessons were scored in pilot and subsequent phases using different observation protocols and web-based tools, with scoring focused on teachers with complete and randomized data.

For more information on phases of video observation scoring see Chapter 10 of the User Guide (pdf).

ICPSR has organized the MET LDB data into a series of eight studies, each of which has an ICPSR “Study Number.” Use the file organization chart (pdf) to understand the file organization of MET data. For each file in the MET Longitudinal Database (LDB), the chart lists the file name, its associated ICPSR study name and number, and the primary IDs included for merging or linking data within or across studies. Files are provided in SPSS, SAS, Stata, R, and ASCII formats. The ASCII file name (e.g., da34771-0001.txt) appears in the chart; other formats use the same naming convention with different extensions (e.g., .sav, .rda).
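The naming convention can be expressed as a small helper. This sketch assumes the four-digit suffix is the dataset (part) number within a study and zero-pads it; `.txt`, `.sav`, and `.rda` come from the examples above, while `.dta` for Stata is an assumption based on Stata’s standard extension. SAS is omitted because its extension is not stated here. Actual file names should always be confirmed against the file organization chart.

```python
# Extensions by format. "txt" (ASCII), "sav" (SPSS), and "rda" (R) follow the
# examples in this guide; "dta" for Stata is an assumption.
EXTENSIONS = {"ascii": "txt", "spss": "sav", "r": "rda", "stata": "dta"}


def met_file_name(study_number: int, part: int, fmt: str = "ascii") -> str:
    """Build a name like 'da34771-0001.txt' from a study number and part number."""
    if not 1 <= part <= 9999:
        raise ValueError("part number must fit in four digits")
    return f"da{study_number}-{part:04d}.{EXTENSIONS[fmt]}"
```

For example, `met_file_name(34771, 1)` produces the ASCII name shown above, `da34771-0001.txt`.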

MET Studies:

- Study Information (ICPSR 34771)

Includes video information, randomization data (teacher and student assignments), a subject ID crosswalk, and teacher demographics (gender, ethnicity, experience, education).

- Core Files, 2009-2011 (ICPSR 34414)

Core longitudinal data files for teachers who participated in Year 1 or both years; includes only aggregated/summarized variables and excludes specialty instruments.

- Base Data: Section-Level Analytical Files, 2009-2011 (ICPSR 34309)

Section-level analytical files combining demographics, test scores, value-added measures, and student survey results, aggregated at the teacher section level.

- Base Data: Item-Level Supplemental Test Files, 2009-2011 (ICPSR 34868)

Item-level files for the three supplemental achievement tests (SAT-9, BAM, ACT) administered to students in both years of the MET study.

- Base Data: Item-Level Observational Scores, 2009-2011 (ICPSR 34346)

Item-level scores from classroom observation protocols (CLASS, FFT, MQI, PLATO, QST, UTOP), based on video recordings and teacher commentary, across both study years.

- Base Data: Item-Level Surveys and Assessment Teacher Files, 2009-2011 (ICPSR 34345)

Files containing teacher, principal, and student surveys, teacher knowledge assessments, and curriculum surveys, focused on evaluating perceptions and predicting teacher effectiveness.

- District-Wide Files, 2008-2014 (ICPSR 34798)

District-wide files with student demographics, specialty status (e.g., free lunch, ELL, gifted), test scores, and teacher-level aggregates across study years and the prior year.

- Observation Score Calibration and Validation, 2011 (ICPSR 37090)

Data on the consistency of classroom observation scoring, including rater validation outcomes and retraining frequency for MET project items.

The MET LDB project includes one of the largest collections of classroom videos in the U.S.—approximately 12,000 sessions from grades 4–9. The initial set, collected during the 2009–10 and 2010–11 academic years, involved nearly 3,000 volunteer teachers from 317 schools in six urban districts. Each video captures a full class period, and participating teachers contributed multiple recordings each year. These videos are linked with rich survey and instructional data, allowing for in-depth analysis of classroom practices and environments. Due to consent restrictions, these videos may only be used for research purposes.

Approved researchers may request access to MET video files through the secure MET Video Player, which streams videos directly to your computer via a web browser (outside of the VDE). The player offers a categorized, filterable list of uniquely identified videos for selection and viewing. Each video in the Observation Session List in the MET Video Player is associated with a four-character alphanumeric Session ID, which can be cross-referenced with the MET data files.
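Session IDs noted in the MET Video Player can then be matched to rows in the data files. The sketch below is hypothetical: it assumes the data rows carry the Session ID in a column named `session_id` (check the codebooks for the real variable name), verifies that each ID is four alphanumeric characters, and reports IDs with no matching rows instead of silently dropping them. Letter case is left unchanged, since this guide does not say whether IDs are case-sensitive.

```python
import re

# Four-character alphanumeric Session ID, as described for the MET Video Player.
SESSION_ID = re.compile(r"[A-Za-z0-9]{4}")


def link_sessions(video_sessions, data_rows, id_key="session_id"):
    """Pair Session IDs selected in the Video Player with matching data rows.

    video_sessions: iterable of Session ID strings noted from the player.
    data_rows: iterable of dicts; `id_key` is a hypothetical column name.
    Returns (matched, unmatched): dict of ID -> list of rows, and a list of IDs with no rows.
    """
    index = {}
    for row in data_rows:
        index.setdefault(row[id_key], []).append(row)

    matched, unmatched = {}, []
    for sid in video_sessions:
        sid = sid.strip()
        if not SESSION_ID.fullmatch(sid):
            raise ValueError(f"not a four-character alphanumeric Session ID: {sid!r}")
        if sid in index:
            matched[sid] = index[sid]
        else:
            unmatched.append(sid)
    return matched, unmatched
```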

The MET Extension Project (MET-X) adds about 2,000 more classroom videos from 2011–12 and 2012–13, with broader consent allowing use for both research and teacher training. However, MET-X videos lack associated metadata and cannot be linked to quantitative data about teachers, students, or schools.

Restricted Access to MET Classroom Videos in the MET Video Player

These additional documentation files are a sample of the web-based rater training courses and video scoring guides used to instruct video observation raters for a selection of the video observation scoring measures. This material is provided for the purpose of research and documentation of the Measures of Effective Teaching Longitudinal Database and is not to be used for other purposes. Please be advised that these materials may contain broken links to material no longer available.

Framework for Teaching

MET Rater Training: CLASS Training

- Analysis and Problem Solving Cheat Sheet

- Behavior Management Cheat Sheet

- Content Understanding Cheat Sheet

- Instructional Dialogue Cheat Sheet

- Instructional Learning Formats Cheat Sheet

- Negative Climate Cheat Sheet

- Positive Climate Cheat Sheet

- Productivity Cheat Sheet

- Quality of Feedback Cheat Sheet

- Regard for Adolescent Perspectives Cheat Sheet

- Regard for Student Perspectives Cheat Sheet

- Student Engagement Cheat Sheet

- Teacher Sensitivity Cheat Sheet

- Scoring Leader Training: CLASS Secondary

CMQI Lite

Quality Science Teaching Scales

- Assigns Tasks to Promote Learning and Addresses the Task Demands

- Demonstrates Content Knowledge

- Elicits Evidence of Students’ Knowledge and Conceptual Understanding

- Guides Analysis and Interpretation of Data

- Promotes Students’ Interest and Motivation to Learn Science

- Provides Feedback for Learning

- Provides Guidelines for Conducting the Investigation and Gathering Data

- Sets the Context and Focuses Learning on Key Science Concepts

- Uses Modes of Teaching Science Concepts

- Uses Representations

MET Project Instrument Descriptions

- Teacher Instruments

  - Teachers’ Perceptions and the MET Project (pdf) (Teacher Working Conditions Survey)

  - Content Knowledge for Teaching and the MET Project (pdf) (Content Knowledge for Teaching Assessment – CKT)

- Classroom Observation Instruments

  - The CLASS Protocol for Classroom Observations (pdf) (The Classroom Assessment Scoring System – CLASS)

  - Danielson’s Framework for Teaching for Classroom Observations (pdf) (Framework for Teaching – FFT)

  - The MQI Protocol for Classroom Observations (pdf) (Mathematical Quality of Instruction – MQI lite)

  - The PLATO Protocol for Classroom Observations (pdf) (Protocol for Language Arts Teaching Observation – PLATO Prime)

- Student Instruments

  - Student Assessments and the MET Project (pdf) (Student Supplemental Assessments)

ICPSR has prepared a number of video tutorials about MET data, available on YouTube. In addition, the American Educational Research Association and MET LDB staff prepared an introductory video on MET (the site requires a free account).

MET Early Career Grants Webinars

An introduction for potential grantees to the scope of the MET project and the data collected. The grants program has ended, but the recordings are provided here for informational purposes.

- Measures of Effective Teaching Early Career Grants Program, Part 1

- Measures of Effective Teaching Early Career Grants Program, Part 2

- Measures of Effective Teaching Early Career Grants Program, Part 3

Using the MET LDB

A webinar series offering descriptions and discussion of various facets of the MET LDB.

- Using the MET LDB Video Data: Access, scoring, and linking

- Random Assignment in the MET LDB

- MET Early Career Grantees: Research Projects Underway and Preliminary Findings

- Video Data Within the MET LDB: Video Capture, Scoring Protocols, and Measures Used

MET LDB Lecture Series

A series of three lectures providing in-depth discussion of complex facets of the MET LDB, given by faculty with experience using the database for secondary analysis.

- Understanding the Nested Data Structure, Elizabeth Minor, National Louis University

- Implications of Using the Nested Data, Ben Kelcey, University of Cincinnati

- Using the Randomized Sample, Matthew Steinberg, University of Pennsylvania